When developing any modern web application, maintaining blazing-fast speed is critical for a great user experience. To achieve this, non-urgent or computationally intensive operations should always be delegated to the background. These tasks might include everything from generating complex reports and processing uploaded media to running scheduled database maintenance. Effectively managing these hidden processes is a central challenge in building a stable and scalable platform.

The crucial dilemma for development teams is deciding how to execute these necessary tasks without placing a heavy load on the main application server. On one hand, you have the familiar control of a classic, always-on server infrastructure. On the other, serverless computing offers an agile, cost-efficient model that promises near-unlimited scalability and pay-as-you-go pricing. In this post, we will dissect both the traditional server-based methods and the modern serverless architecture, evaluating their respective pros and cons so you can determine which engine best suits your next project’s technical and budgetary needs.

The classic approach: server-based background tasks

The server-based approach is the traditional method where all background operations are managed and executed directly on a dedicated application server. This method gives engineers maximum control over the environment and resources.

Cron jobs: the time-tested scheduler

Cron is the most foundational and time-tested scheduling utility on Unix and Linux systems. With one-minute granularity, it lets engineers automate script execution at intervals ranging from every minute to once a year. It remains a reliable option for systems where the infrastructure is already in place.

Common scenarios where Cron is an excellent fit:

- Routine database processing: executing queries that periodically clean or process large data sets.

- System backups: automatically creating and archiving essential data files on a fixed schedule.

- Log and file cleanup: deleting old temporary files or rotating logs to free up disk space.

Here is an example of scheduling a backup with Cron. First, open the current user's crontab for editing in the console:

```bash
crontab -e
```

On first launch, the system may prompt you to choose a text editor, for example, Nano.

In the file that opens, add a single line that defines the schedule and the command to run. Suppose we already have a ready-to-use backup script located at /home/user/scripts/backup.sh:

```
30 2 * * * /home/user/scripts/backup.sh
```

The line is read from left to right, and its elements mean the following:

- `30`: minute (0–59)

- `2`: hour (0–23)

- `*`: day of month (1–31); an asterisk matches every value

- `*`: month (1–12)

- `*`: day of week (0–7, where both 0 and 7 represent Sunday)

- `/home/user/scripts/backup.sh`: the command to run (the script must be executable, e.g. via `chmod +x`)

In other words, this line says: “Run /home/user/scripts/backup.sh at 2:30 AM every day, in every month, on any day of the week.” Cron interprets these times in the server's local time zone.
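To make the field rules concrete, here is a minimal TypeScript sketch that checks whether a given moment matches a five-field expression. It illustrates the rules under stated assumptions and is not a substitute for the cron daemon: it handles only `*`, numbers, comma-separated lists, ranges, and `*/n` or `a-b/n` steps, ignores month and weekday names, and reads the date in local time. The helper names `expandField` and `matchesCron` are hypothetical.

```typescript
// Allowed range for each of the five cron fields, in order.
const CRON_FIELDS = [
  { min: 0, max: 59 }, // minute
  { min: 0, max: 23 }, // hour
  { min: 1, max: 31 }, // day of month
  { min: 1, max: 12 }, // month
  { min: 0, max: 7 }, // day of week (0 and 7 both mean Sunday)
];

// Parses a non-negative integer, rejecting empty or non-numeric text.
function toInt(text: string): number {
  if (!/^\d+$/.test(text)) throw new Error(`Not a number: "${text}"`);
  return Number(text);
}

// Expands one field such as "*", "5", "1-5", "1,15" or "*/10" into the set of values it allows.
function expandField(field: string, min: number, max: number): Set<number> {
  const values = new Set<number>();
  for (const part of field.split(",")) {
    const [range, stepText] = part.split("/");
    const step = stepText === undefined ? 1 : toInt(stepText);
    let start: number;
    let end: number;
    if (range === "*") {
      start = min;
      end = max;
    } else if (range.includes("-")) {
      [start, end] = range.split("-").map(toInt);
    } else {
      start = end = toInt(range);
    }
    if (step < 1 || start < min || end > max || start > end) {
      throw new Error(`Invalid cron field: "${field}"`);
    }
    for (let v = start; v <= end; v += step) values.add(v);
  }
  return values;
}

// Returns true if the date (read in local time) matches the five-field expression.
function matchesCron(expression: string, date: Date): boolean {
  const fields = expression.trim().split(/\s+/);
  if (fields.length !== 5) {
    throw new Error("Expected five fields: minute hour day-of-month month day-of-week");
  }
  const [minutes, hours, daysOfMonth, months, daysOfWeek] = fields.map((f, i) =>
    expandField(f, CRON_FIELDS[i].min, CRON_FIELDS[i].max),
  );
  if (daysOfWeek.has(7)) daysOfWeek.add(0); // JavaScript's getDay() reports Sunday as 0

  const domOk = daysOfMonth.has(date.getDate());
  const dowOk = daysOfWeek.has(date.getDay());
  // Classic cron rule: if both day fields are restricted (neither starts with "*"),
  // a match on EITHER one is enough; otherwise both must match.
  const bothRestricted = !fields[2].startsWith("*") && !fields[4].startsWith("*");
  const dayOk = bothRestricted ? domOk || dowOk : domOk && dowOk;

  return minutes.has(date.getMinutes()) && hours.has(date.getHours()) && months.has(date.getMonth() + 1) && dayOk;
}

// The backup line from above: 2:30 AM every day.
console.log(matchesCron("30 2 * * *", new Date(2024, 0, 15, 2, 30))); // true
console.log(matchesCron("30 2 * * *", new Date(2024, 0, 15, 3, 30))); // false
```

The day-field rule at the end mirrors classic cron behavior: when both day-of-month and day-of-week are restricted, a match on either one triggers the job, which is easy to overlook.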

Framework-specific solutions

When the main business logic is written in a language like JavaScript or TypeScript, it feels natural to manage background operations within that same environment. This eliminates the complexity of managing external shell scripts and allows for seamless reuse of existing code and libraries.

For instance, the node-cron library is a simple and widely popular choice for scheduling tasks using classic Cron expressions right inside any Node.js application.

Let’s imagine that we need to send users a newsletter email every Friday at 16:00:

```javascript
// npm install node-cron
const cron = require("node-cron");

// Placeholder for the actual sending logic
async function sendNewsletter() {
  // logic of sending
}

// "0 16 * * 5": minute 0, hour 16, any day of the month, any month, Friday (5)
cron.schedule("0 16 * * 5", async () => {
  console.log(`[${new Date().toISOString()}] Starting weekly newsletter...`);
  try {
    // Awaiting the promise lets the catch block handle asynchronous errors too
    await sendNewsletter();
  } catch (error) {
    console.error("Error while sending the newsletter:", error);
  }
});
```

Meanwhile, enterprise-level applications built on NestJS benefit from the @nestjs/schedule module. It integrates with the framework’s architecture, using decorators and Dependency Injection (DI) to manage scheduled tasks, which makes it a common choice for structured, large-scale systems.

Suppose that every day at midnight we need to aggregate the previous day’s statistics and save the report to the database:

```typescript
import { Injectable, Logger } from '@nestjs/common';
import { Cron, CronExpression } from '@nestjs/schedule';
import { AnalyticsService } from '../analytics/analytics.service'; // Example of another service

@Injectable()
export class TasksService {
  private readonly logger = new Logger(TasksService.name);

  constructor(private readonly analyticsService: AnalyticsService) {}

  // CronExpression.EVERY_DAY_AT_MIDNIGHT is a predefined constant for "every day at 00:00"
  @Cron(CronExpression.EVERY_DAY_AT_MIDNIGHT, {
    name: 'daily-report',
    timeZone: 'Europe/Kiev',
  })
  async handleDailyReport() {
    this.logger.log('Launching daily report generation...');
    try {
      await this.analyticsService.generateDailyReport();
      this.logger.log('Daily report successfully generated.');
    } catch (error) {
      // Pass the stack trace (if any) so it shows up in the log output
      this.logger.error('Error during report generation', error instanceof Error ? error.stack : String(error));
    }
  }
}
```

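The @Cron decorator marks a method as a scheduled job, and enums such as CronExpression.EVERY_DAY_AT_MIDNIGHT replace raw cron strings for readability. For the decorator to take effect, ScheduleModule.forRoot() must be imported in the application’s root module.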

Serverless execution: from dormancy to action

The term “serverless” simply means developers are relieved of managing the underlying infrastructure, operating systems, or servers. Instead, you deploy code as small, isolated functions, and the cloud provider (such as AWS, Google Cloud, or Microsoft Azure) handles scaling, maintenance, and execution entirely. This model is often referred to as Functions as a Service (FaaS).

The core principle here is that your function remains dormant until a specific external event, known as a trigger, brings it to life.

Event-driven execution

In this model, your code runs only in response to a particular event (trigger) within the cloud ecosystem. A key difference from traditional architecture is the lifecycle: the function runs, finishes its job, and stops consuming resources until the next trigger. Each invocation should be treated as an independent, isolated, on-demand process; the platform may reuse a warm execution environment for later invocations, but your code cannot rely on any state surviving between them.

Triggers are the crucial link that activates these functions. They can be compared to digital tripwires that initiate your code.

Examples of serverless triggers and their use cases include:

- HTTP Requests (API Gateway): Functions run on demand to handle standard API calls, which makes them a good fit for serverless back-ends.

- File Uploads (S3): A function starts automatically every time a new image or document is added to cloud storage, which is ideal for media processing.

- Scheduled Runs (EventBridge): This is the direct serverless equivalent to Cron, allowing functions to be launched reliably on a fixed daily or hourly schedule.
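As an illustration of the file-upload trigger, here is a minimal sketch of an AWS Lambda handler in TypeScript. The `S3UploadEvent` type is a hand-written subset of the S3 notification payload (a real project would more likely use the `@types/aws-lambda` package), and `processUpload` is a hypothetical placeholder for the media-processing logic. S3 delivers object keys URL-encoded, with spaces as `+`, so they are decoded before use.

```typescript
// Hand-written subset of the S3 event notification shape (an assumption for this sketch).
interface S3UploadEvent {
  Records: Array<{
    eventName: string; // e.g. "ObjectCreated:Put"
    s3: {
      bucket: { name: string };
      object: { key: string; size?: number };
    };
  }>;
}

interface UploadedObject {
  bucket: string;
  key: string;
}

// Keeps only "object created" records and decodes their keys (S3 URL-encodes them, spaces as "+").
function getUploadedObjects(event: S3UploadEvent): UploadedObject[] {
  return event.Records.filter((record) => record.eventName.startsWith("ObjectCreated:")).map((record) => ({
    bucket: record.s3.bucket.name,
    key: decodeURIComponent(record.s3.object.key.replace(/\+/g, " ")),
  }));
}

// Hypothetical placeholder for the real work (resizing an image, extracting metadata, ...).
async function processUpload(object: UploadedObject): Promise<void> {
  console.log(`Processing s3://${object.bucket}/${object.key}`);
}

// Lambda entry point: the platform calls it with a batch of S3 notification records.
export const handler = async (event: S3UploadEvent): Promise<void> => {
  for (const object of getUploadedObjects(event)) {
    await processUpload(object);
  }
};
```

Keeping the parsing in a plain function makes it easy to test locally without deploying anything.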

Using a scheduled trigger, you can, for example, run a function daily at 3:00 AM to perform cache maintenance or generate reports. Since you pay only for the time your code actually runs, this approach is very cost-effective for periodic tasks.

Serverless functions operate on an event-driven model, turning resource idling into immediate, cost-effective action.
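The daily 3:00 AM example could be written as the EventBridge schedule expression `cron(0 3 * * ? *)`. EventBridge cron expressions differ from classic Cron in a few ways: they have a sixth field for the year, one of the two day fields must be `?`, day-of-week values run from 1 (Sunday) to 7 (Saturday), and scheduled rules are evaluated in UTC. The hypothetical helper below sketches a conversion for simple expressions and deliberately rejects anything it cannot translate safely, such as weekday ranges or restricting both day fields at once.

```typescript
// Hypothetical helper: converts a simple five-field cron expression into an
// EventBridge schedule expression (six fields, the last one being the year).
function toEventBridgeCron(expression: string): string {
  const fields = expression.trim().split(/\s+/);
  if (fields.length !== 5) throw new Error("Expected five fields");
  const [minute, hour, dom, month, dow] = fields;

  let dayOfMonth = dom;
  let dayOfWeek = dow;
  if (dow === "*") {
    dayOfWeek = "?"; // only day-of-month (possibly "*") restricts the date
  } else if (dom === "*") {
    dayOfMonth = "?"; // only day-of-week restricts the date
    dayOfWeek = dow.split(",").map(toEventBridgeWeekday).join(",");
  } else {
    // Classic cron treats two restricted day fields as "either matches"; EventBridge cannot express that.
    throw new Error("Cannot restrict both day-of-month and day-of-week in EventBridge");
  }
  return `cron(${minute} ${hour} ${dayOfMonth} ${month} ${dayOfWeek} *)`;
}

// Classic cron: 0 or 7 = Sunday, 1 = Monday ... 6 = Saturday.
// EventBridge:  1 = Sunday, 2 = Monday ... 7 = Saturday.
function toEventBridgeWeekday(value: string): string {
  if (!/^[0-7]$/.test(value)) throw new Error(`Unsupported day-of-week value: "${value}"`);
  return String((Number(value) % 7) + 1);
}

console.log(toEventBridgeCron("0 3 * * *")); // cron(0 3 * * ? *)
```

Because the resulting rule runs in UTC, a job meant for 3:00 AM local time needs its hour adjusted by the server's UTC offset.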

Comparing server-based and serverless: a factor-by-factor analysis

The choice between these two architectures usually boils down to a core decision: having full control over resources or benefiting from the convenience of automatic scaling with low operational costs. Below, we highlight some key business and technical factors for you to consider when weighing these options.

Simplicity of execution

Server-based is, at first glance, the simplest approach. If you already have a server, adding a new cron job takes just a few minutes: a single line in crontab or a few lines of code in a Node.js app, and the task is running. The barrier to entry is minimal as long as the infrastructure is already in place.

Serverless requires more effort to set up initially. You need to familiarize yourself with the cloud provider’s console or tooling, configure access permissions (IAM roles), package your code into an archive, and set up triggers. Once that groundwork is done, however, creating new functions is quick and repeatable.

Cost structure

With the server-based method, the financial model is fixed: you pay for the server 24 hours a day, seven days a week, regardless of how much work it is actually doing. If your main application server has spare capacity, the background tasks might feel “free,” but you are still paying for the full machine time. The serverless model uses a pay-per-use structure, meaning you are billed only for the actual execution time of your code, often measured in milliseconds. If your task runs for five seconds once a day, you pay only for those five seconds.
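The pay-per-use model is easy to reason about with a little arithmetic. AWS Lambda, for example, bills compute as GB-seconds (allocated memory multiplied by execution time) plus a small fee per request. The sketch below estimates a monthly bill from those two components. The prices are parameters you must fill in from your provider's current price list, the function names are illustrative, and free tiers and other charges such as data transfer are ignored.

```typescript
interface Workload {
  invocationsPerMonth: number;
  avgDurationMs: number;
  memoryMb: number;
}

interface PriceList {
  pricePerGbSecond: number; // take this from your provider's pricing page
  pricePerMillionRequests: number;
}

// GB-seconds = invocations x duration (s) x memory (GB).
function gbSeconds({ invocationsPerMonth, avgDurationMs, memoryMb }: Workload): number {
  return invocationsPerMonth * (avgDurationMs / 1000) * (memoryMb / 1024);
}

// Estimated monthly cost of a pay-per-use function (free tier and data transfer ignored).
function estimateMonthlyCost(workload: Workload, prices: PriceList): number {
  const compute = gbSeconds(workload) * prices.pricePerGbSecond;
  const requests = (workload.invocationsPerMonth / 1_000_000) * prices.pricePerMillionRequests;
  return compute + requests;
}

// The example from the text: a 5-second task once a day (about 30 runs a month) with 512 MB of memory.
const dailyTask: Workload = { invocationsPerMonth: 30, avgDurationMs: 5000, memoryMb: 512 };
console.log(gbSeconds(dailyTask)); // 75
```

Comparing the estimate with the fixed monthly price of a server, which you pay whether or not the task runs, quickly shows which model is cheaper for a given workload.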

Operational reliability

The reliability of a server-based system rests entirely with your engineering team. You are responsible for configuring monitoring and failover and for making sure scheduled processes don’t crash unnoticed. With serverless, the availability of the platform itself is backed by the cloud provider’s SLA (Service Level Agreement), although the correctness of your code remains your responsibility. These platforms are built to be highly available by default and often include built-in mechanisms such as automatic retries; AWS Lambda, for instance, retries a failed asynchronous invocation (such as one triggered by S3 or EventBridge) up to two more times by default.

Serverless transforms the manual burden of failover into automated resilience backed by a service agreement.
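On a server you run yourself, retries are something your team writes and maintains. A minimal sketch of what that can look like is a wrapper that re-runs a failing job with exponential backoff. The names `withRetry` and `backoffDelays` and the default values are illustrative assumptions, not a particular library's API. Because a retried job may run more than once, the job itself should be safe to repeat, which also holds for functions that a serverless platform retries automatically.

```typescript
// Delays (ms) before each retry: base, 2x base, 4x base, ... capped at maxDelayMs.
function backoffDelays(retries: number, baseDelayMs: number, maxDelayMs: number): number[] {
  return Array.from({ length: retries }, (_, i) => Math.min(baseDelayMs * 2 ** i, maxDelayMs));
}

const sleep = (ms: number) => new Promise<void>((resolve) => setTimeout(resolve, ms));

// Runs `job`, retrying on failure; re-throws the last error once all retries are used up.
async function withRetry<T>(
  job: () => Promise<T>,
  { retries = 3, baseDelayMs = 1000, maxDelayMs = 30_000 } = {},
): Promise<T> {
  const delays = backoffDelays(retries, baseDelayMs, maxDelayMs);
  for (let attempt = 0; ; attempt++) {
    try {
      return await job();
    } catch (error) {
      if (attempt >= delays.length) throw error; // out of retries
      console.warn(`Attempt ${attempt + 1} failed, retrying in ${delays[attempt]} ms`, error);
      await sleep(delays[attempt]);
    }
  }
}
```

A scheduler callback can then call `withRetry(() => someJob())` instead of `someJob()` directly, so transient failures such as a brief database outage do not cost a whole scheduled run.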

Flexibility and control

Server-based gives you full control. You can choose any language version, install any system libraries, configure environment variables, and tune the server to your needs. Script execution time and resource usage are limited only by the machine itself. Serverless implies constraints set by the provider: a maximum execution time (for example, 15 minutes for AWS Lambda), limited memory and temporary disk space, a restricted choice of runtime versions, and code and configuration that are tightly coupled to a specific cloud platform.

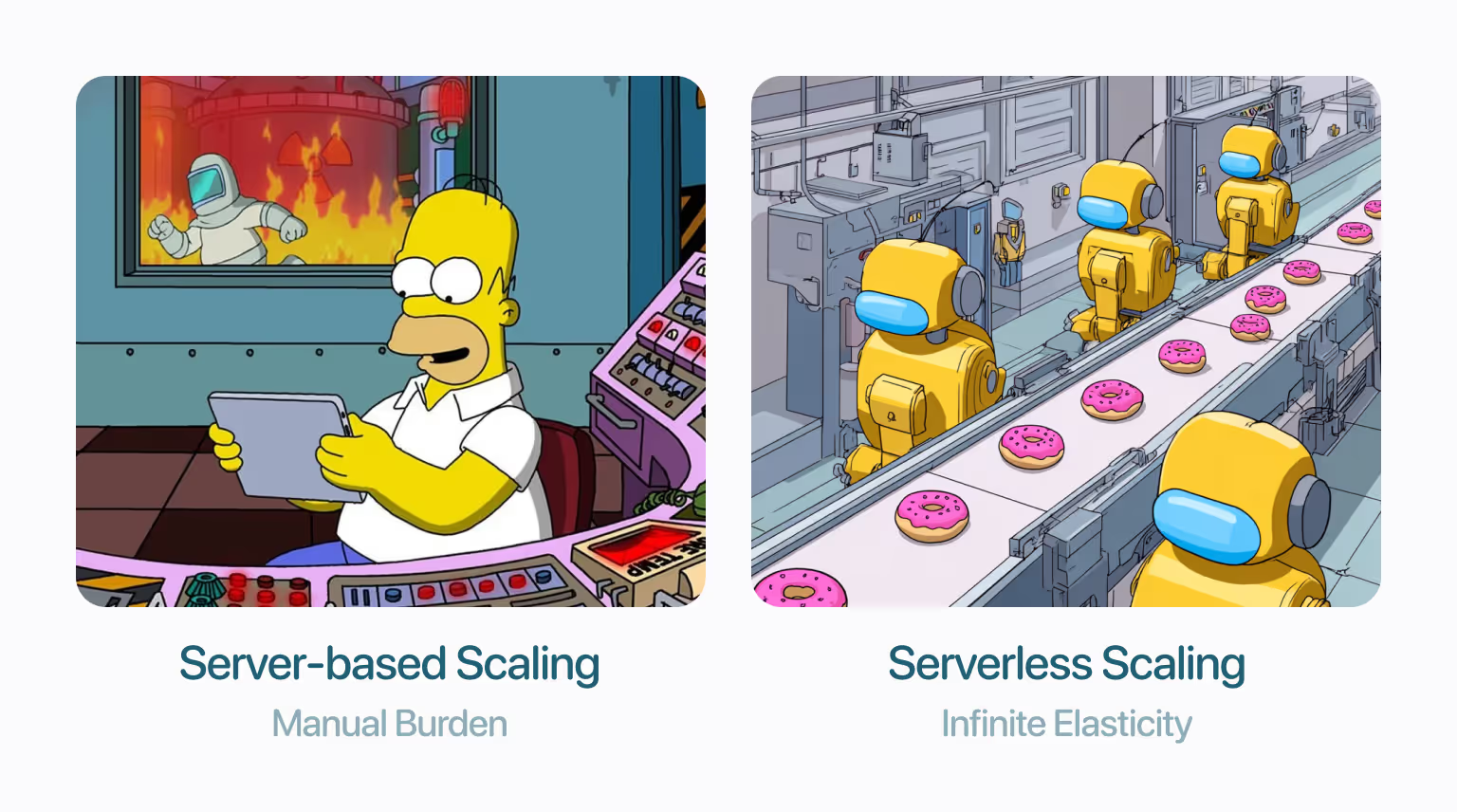
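One common way to live within a maximum execution time such as Lambda's 15 minutes is to process work in chunks and stop early when time runs low, returning a cursor so that a follow-up invocation can continue. In the Node.js runtime, the Lambda context object exposes `getRemainingTimeInMillis()`; the sketch below depends only on that method through a minimal `TimeBudget` interface, so it can be tested with a fake clock. The function `processWithinBudget` and the 30-second safety margin are assumptions for illustration.

```typescript
// The only part of the Lambda context this sketch relies on.
interface TimeBudget {
  getRemainingTimeInMillis(): number;
}

interface BatchResult {
  processed: number;
  nextIndex: number | null; // null when every item has been handled
}

// Processes items from `startIndex` until done or until less than `safetyMarginMs` remains.
function processWithinBudget<T>(
  items: T[],
  startIndex: number,
  budget: TimeBudget,
  handleItem: (item: T) => void,
  safetyMarginMs = 30_000,
): BatchResult {
  let index = startIndex;
  while (index < items.length) {
    if (budget.getRemainingTimeInMillis() < safetyMarginMs) {
      // Stop cleanly; the caller can re-invoke the function (or enqueue a message) with nextIndex.
      return { processed: index - startIndex, nextIndex: index };
    }
    handleItem(items[index]);
    index++;
  }
  return { processed: index - startIndex, nextIndex: null };
}
```

On a server, the same job would simply run to completion; the cursor is the price paid for the execution-time limit.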

Scaling capabilities

Server-based scaling is often a heavy burden that falls entirely on your development team. If the volume of background tasks surges unexpectedly, you must launch additional worker processes or even provision new servers, which demands careful planning and active administration. Serverless scaling, by contrast, is automatic and fast, bounded mainly by the provider’s concurrency quotas. If a thousand files are uploaded simultaneously, the platform can run parallel instances of your function, up to your account’s concurrency limit, without any manual intervention from your team.
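The planning that server-based scaling demands often starts with a back-of-the-envelope estimate. Little's law (average number of jobs in progress = arrival rate × average processing time) gives the number of workers needed to keep up with a steady stream of jobs. The sketch below applies it with an optional utilization target to leave headroom for spikes; the function name and example figures are illustrative.

```typescript
// Little's law: jobs in progress = arrival rate x time per job.
// Returns how many parallel workers are needed to keep up with the incoming jobs.
function requiredWorkers(jobsPerMinute: number, avgJobSeconds: number, targetUtilization = 1): number {
  if (targetUtilization <= 0 || targetUtilization > 1) {
    throw new Error("targetUtilization must be in (0, 1]");
  }
  const busyWorkers = (jobsPerMinute * avgJobSeconds) / 60; // average number of jobs running at once
  return Math.ceil(busyWorkers / targetUtilization);
}

// 1,000 uploads per minute, 3 seconds of processing each: 50 workers busy on average.
console.log(requiredWorkers(1000, 3)); // 50
// Keeping workers at most 50% busy to absorb spikes doubles that.
console.log(requiredWorkers(1000, 3, 0.5)); // 100
```

On a server, those workers are processes or machines you provision ahead of time; with serverless functions, the platform adds and removes instances as the arrival rate changes.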

Finding your balance: the hybrid advantage

The most advanced and robust systems today rarely rely on just one approach. Instead, they intelligently combine the strengths of both, creating a hybrid architecture for maximum efficiency.

For example, a common modern configuration is to run your core, stable, high-performance REST API on a classic, persistent server where you need maximum control. At the same time, you offload all your sporadic, resource-heavy, or event-driven tasks — like a massive data aggregation job or a media processing queue — to serverless functions.

The job of a skilled software architect is not to pick a side but to understand the distinct benefits of each tool. By thoughtfully separating tasks based on their requirements for control, duration, and scalability, you can create a reliable, cost-optimized system ready to handle any load.
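The separation described above can be written down as an explicit checklist. The sketch below is one hypothetical way to encode it: the 15-minute cap mirrors the AWS Lambda limit mentioned earlier, while the other rules simply restate this article's criteria and should be adjusted to your provider and workload. `TaskProfile` and `suggestPlacement` are illustrative names.

```typescript
type Placement = "server" | "serverless" | "either";

interface TaskProfile {
  maxDurationMinutes: number; // worst-case runtime of a single run
  needsCustomSystemSetup: boolean; // special system libraries or language versions
  eventDriven: boolean; // triggered by uploads, messages, or HTTP calls rather than a fixed clock
  burstyLoad: boolean; // volume can spike far above the average
}

const MAX_FUNCTION_MINUTES = 15; // e.g. the AWS Lambda limit; check your provider's current quota

// Returns a suggested placement plus the reason for it.
function suggestPlacement(task: TaskProfile): { placement: Placement; reason: string } {
  if (task.maxDurationMinutes > MAX_FUNCTION_MINUTES) {
    return { placement: "server", reason: "runtime exceeds the function time limit" };
  }
  if (task.needsCustomSystemSetup) {
    return { placement: "server", reason: "needs full control over the environment" };
  }
  if (task.eventDriven || task.burstyLoad) {
    return { placement: "serverless", reason: "benefits from automatic, per-event scaling" };
  }
  return { placement: "either", reason: "compare the fixed server cost with the pay-per-use estimate" };
}
```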

So, what’s right for your system?

The right choice depends on the specific nature of your background tasks. Some processes demand full control and unlimited runtime, while others benefit from the elasticity and cost-efficiency of serverless execution. The key is to analyze each task: its expected load, duration, frequency, and tolerance for delays.

With that understanding, you can decide whether it belongs on a persistent server, in a serverless function, or in a hybrid setup that combines both. The result is an infrastructure that scales with demand, minimizes unnecessary costs, and frees your team to focus on delivering real product value — instead of managing servers.
