Large language models (LLMs) now write emails, summarize reports, and answer support tickets, but for most people, what happens under the hood is still a mystery. There is also confusion about where these tools excel and where they fall short. Before you integrate an LLM into your workflow, it helps to understand what it does well, how it behaves, and what can go wrong if you treat it like a magic box.

In this article, we provide a clear starting point for understanding LLMs: how they work, how to adjust them, and how to use them effectively. It’s not a deep technical manual, but a practical introduction for anyone curious about how these systems function and where they can be applied. We favor concrete examples over lengthy theory that describes what to strive for without showing how to get started.

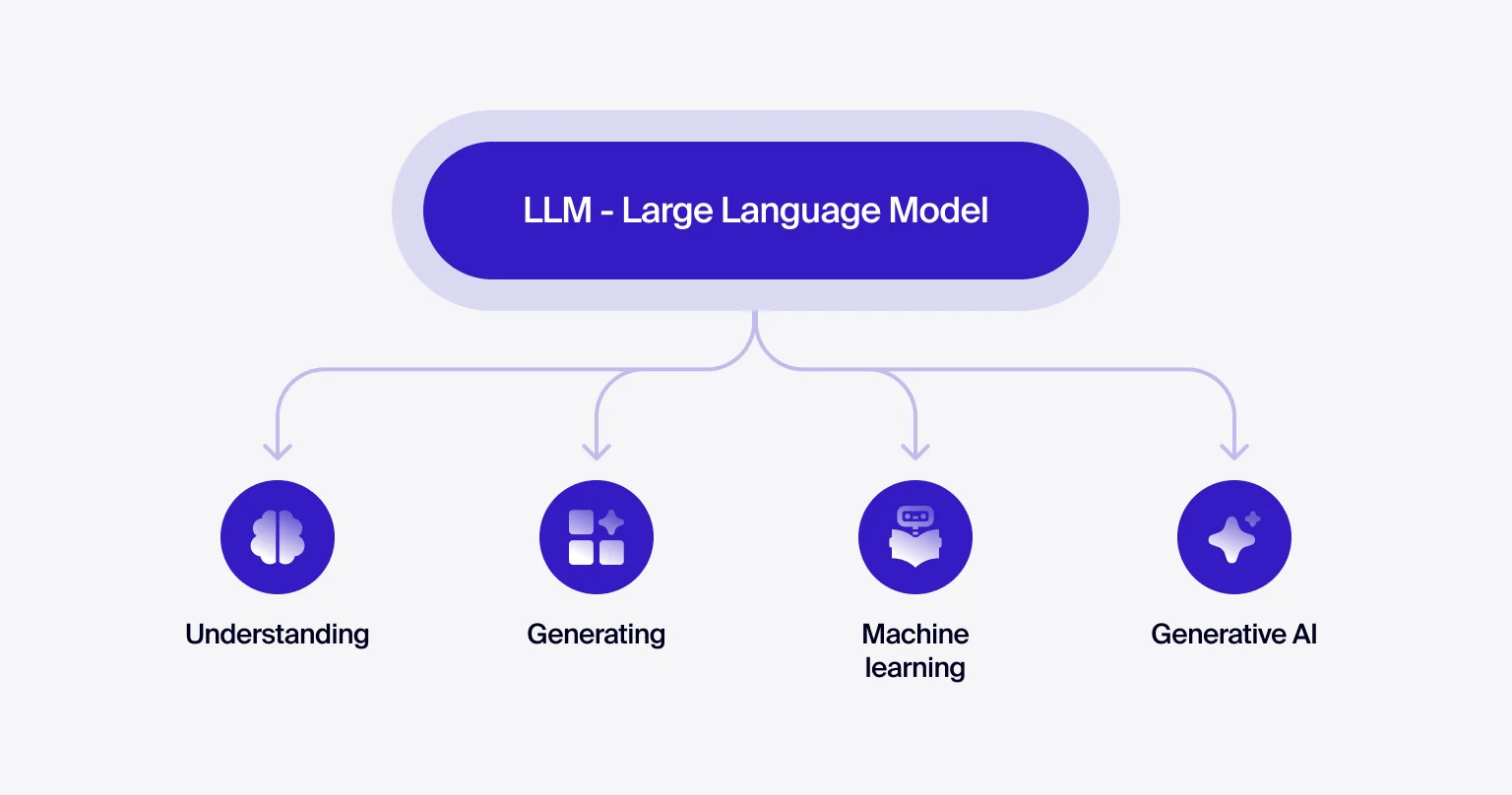

What is an LLM?

Let’s break down what generative artificial intelligence (GenAI) means. “Generative” highlights the core ability of this technology: the creation of content. That includes text, images, video, and more, with some limitations. Here, we’re focusing on text generation.

“Artificial” means it was built by people. The “intelligence” part refers to the system’s ability to analyze large datasets, spot patterns, adapt to new input, learn from examples, generate new outputs, and respond appropriately to context.

LLMs use human language as both input and output. “Large” points to their scale: modern models range from a few billion to hundreds of billions of parameters (GPT-3 has 175 billion, PaLM has 540 billion). A “model” in machine learning is an algorithm whose parameters have been trained to perform a task.

Now let’s explain some technical terms that we will use along the way:

- Parameters are the values in a neural network that are adjusted during training so that the model makes better predictions. There are two key types:

- Weights — show how important each input is to the model.

- Bias — shifts the output to improve flexibility.

More parameters generally give a model more capacity to learn patterns, but they also make it more expensive to train and run.

- A neuron is a simple function. It takes input, processes it, and sends the result forward.

Here’s a small example of how a neuron works using Python:

    import numpy as np

    X = np.array([0.5, 0.8])   # inputs
    W = np.array([0.7, -0.2])  # weights: one per input
    b = 0.1                    # bias

    def relu(x):
        """Activation function: pass positive values through, clamp negatives to 0."""
        return max(0.0, x)

    # Weighted sum of the inputs plus the bias, passed through the activation.
    output = relu(np.dot(W, X) + b)

    print(output)  # approximately 0.29

Historically, language models were based on recurrent neural networks (RNNs). While RNNs were effective at processing sequential data, they struggled to retain long-range dependencies.

The Transformer architecture, introduced in the 2017 paper “Attention Is All You Need,” revolutionized text processing. It uses a self-attention mechanism, which allows the model to process large volumes of context in parallel, capture distant connections between words, and scale to billions of parameters. Transformers became the foundation of modern LLMs, including GPT, BERT, and others.

It’s easy to look at modern AI and see parallels with John Searle’s “Chinese Room” thought experiment, which raises doubts about the possibility of true strong digital intelligence. That experiment envisions a person who, without understanding Chinese, simply follows instructions to manipulate symbols, creating the impression that they grasp the language. Similarly, today’s LLMs don’t genuinely “understand”; they just generate responses based on the statistical patterns from their training data.

However, this comparison isn’t universally accepted. Some researchers propose that even if individual parts lack comprehension, the system as a whole could potentially develop it once it reaches a certain level of complexity. Think about the human brain: individual neurons don’t “understand” anything on their own, yet their collective interaction gives rise to consciousness. From this viewpoint, if artificial neural networks become complex enough and can generalize knowledge effectively, they might not just mimic understanding, but approach it.

How LLMs operate

An LLM is a complex mechanism designed, among other things, for text generation. To generate something, the model needs to understand what exactly it’s supposed to create. Let’s try to break this process down step-by-step:

- Tokenization: The input text is split into small chunks called tokens. For example: “I love programming” → [I, love, program, ming] → [738, 928, 2143, 5732] (the IDs here are illustrative). A token is the smallest unit of text the model can process, and each one has a unique numeric identifier in the vocabulary, which is built when the tokenizer is trained. This lets the model treat text as a sequence of numbers.

- Vectorization: Each token ID is converted into a vector (an embedding). Embeddings place tokens in a multidimensional space in which similar words have similar coordinates. For example, instead of the numeric token IDs [738, 928, 2143, 5732], each token is represented by a vector: [[0.12, 0.85, ...], [0.56, 0.34, ...], ...]. This vector representation is then passed to the transformer.

- Positional encoding: Unlike RNNs or long short-term memory (LSTM) networks, the transformer architecture has no built-in notion of sequence order, so it requires positional encoding. This encoding is added to the embeddings. As a result, an LLM can distinguish the word order in a sentence and tell which word is in which place.

- Transformer: A neural network architecture that processes sequences using the self-attention mechanism. Self-attention lets the model take into account the context of each word across the entire sentence, not just its nearest neighbors. For instance, in the phrase “the cat sat on the mat”, the word “cat” may have the greatest impact on “sat”, so the model gives it the largest attention weight (for example, 0.5) and gives the other words smaller weights; all the weights together add up to 1.

- Softmax: Converts a set of raw scores into probabilities that add up to 1, from which the next token is chosen. Because it is exponential, it amplifies the highest scores and shrinks the probabilities of less likely options. The model uses these probabilities to pick the next token from among the candidates. Softmax is also used inside the self-attention mechanism to determine the importance of each word in context.

- Generation: After the model selects the next token, that token is appended to the context, and the process repeats. This continues until the maximum token limit is reached or the model produces a special “stop token” that signals the end of generation.
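To make the softmax step more concrete, here is a minimal sketch in plain Python. The three candidate tokens and their raw scores are made up for illustration; a real model scores every token in its vocabulary. The sketch converts the scores to probabilities and then takes the most likely token (a greedy choice).

```python
import math

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtracting the max keeps exp() numerically stable
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical raw scores for three candidate next tokens.
vocab = ["mat", "sofa", "moon"]
logits = [2.0, 1.0, 0.1]

probs = softmax(logits)

# Greedy choice: take the token with the highest probability.
next_token = vocab[probs.index(max(probs))]
print(next_token, [round(p, 2) for p in probs])
```

Note how the exponential widens the gaps: the highest score ends up with well over half of the probability mass, even though the raw scores differ by only one or two units.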

LLM parameters

Large language models sometimes produce responses that seem plausible but aren’t grounded in facts. This phenomenon is known as hallucination. It can happen with any settings, but it becomes more likely when generation parameters are set loosely and the model favors variety over accuracy.

To better control the behavior of an LLM and reduce the risk of hallucinations, it’s essential to understand and tune the key parameters. Here’s an example of a typical setup using the OpenAI API:

    import openai  # requires openai>=1.0 and an API key (for example, in OPENAI_API_KEY)

    model_name = "gpt-4o"
    temperature = 0.7
    top_p = 0.9
    frequency_penalty = 0.2
    presence_penalty = 0.3
    max_tokens = 500

    # Note: top_k (for example, 50) is explained below, but the OpenAI Chat
    # Completions API does not accept it; some other providers and runtimes do.

    response = openai.chat.completions.create(
        model=model_name,
        messages=[{"role": "user", "content": "What is an LLM?"}],
        temperature=temperature,
        top_p=top_p,
        max_tokens=max_tokens,
        frequency_penalty=frequency_penalty,
        presence_penalty=presence_penalty,
    )

    print(response.choices[0].message.content)

Let’s walk through each of these parameters and how they shape the model’s output:

- Temperature: This parameter controls how random, or “creative”, an LLM’s output is. When the model generates text, it predicts a probability for every candidate next token, and temperature reshapes that distribution: low values concentrate probability on the most likely tokens, and high values spread it out. The lower the temperature, the more predictable the responses; excessively high values make “hallucinations” more likely.

- 0.7 - 1.0: Responses are more creative and less predictable, even with the same input data. However, the risk of “hallucinations” increases.

- 0.2 - 0.5: Responses become more predictable and factual, with little creativity. The risk of “hallucinations” is reduced.

- 0: The model always selects the most likely token, which makes the results nearly deterministic. For identical queries, the responses will be very similar. Randomness-driven “hallucinations” become less likely, but the model can still state incorrect facts.

- Top-k: This number determines how many of the most likely tokens are kept in the pool from which the next one is selected. The model sorts candidate tokens by probability from highest to lowest and discards everything below the k-th position. For example, if the model should continue the expression “on burgers, I like to add”, and the likely tokens are: [ketchup (0.2), mustard (0.1), onion (0.05), pickles (0.04), butter (0.02)], then with Top-k = 2, the choice is limited to [ketchup (0.2), mustard (0.1)].

- Top-p: This parameter (also called nucleus sampling) limits the candidates by cumulative probability rather than by count. The model calculates the probability of every possible token, sorts them from most to least likely, keeps the smallest set whose probabilities add up to at least the top-p threshold, and selects the next token from that set:

- If Top-p = 1.0, then all tokens are taken into account.

- If Top-p = 0.1, then only the most likely tokens that together account for 10% of the probability mass are taken into account (often just one token).

- Even if one token has the highest probability, the model can still choose another one from the set, because the choice is random.

- Frequency penalty: Discourages an LLM from repeating the same words. The penalty grows with the number of times a token has already appeared, so higher values mean fewer repetitions.

- Presence penalty: Encourages an LLM to use new words. It applies a one-time penalty to any token that has already appeared, regardless of how often.

- Max tokens: Sets an upper limit on the number of tokens in the response. A higher value allows longer responses but doesn’t force the model to write more.
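The sampling parameters above can be illustrated with a short, self-contained sketch. It is not how any particular provider implements them; the function names are hypothetical, and the probabilities come from the burger example (they don’t sum to 1 because the remaining mass sits on other tokens). Temperature 0 is usually treated as “always pick the top token”, so the function below assumes a temperature greater than zero.

```python
import math
import random

def apply_temperature(logits, temperature):
    """Softmax over logits divided by the temperature (must be > 0)."""
    scaled = [value / temperature for value in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(value - peak) for value in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def top_k_filter(probs, k):
    """Indices of the k most probable tokens, most likely first."""
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    return ranked[:k]

def top_p_filter(probs, p):
    """Smallest set of top tokens whose cumulative probability reaches p."""
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in ranked:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= p:
            break
    return kept

def sample(probs, candidates, rng):
    """Randomly pick one candidate index, weighted by its probability."""
    weights = [probs[i] for i in candidates]
    return rng.choices(candidates, weights=weights, k=1)[0]

tokens = ["ketchup", "mustard", "onion", "pickles", "butter"]
probs = [0.2, 0.1, 0.05, 0.04, 0.02]

top_k_tokens = [tokens[i] for i in top_k_filter(probs, k=2)]
top_p_tokens = [tokens[i] for i in top_p_filter(probs, p=0.25)]
choice = tokens[sample(probs, top_k_filter(probs, k=2), random.Random(0))]

# Temperature: lower values sharpen the distribution, higher values flatten it.
logits = [2.0, 1.0, 0.5]
sharp = apply_temperature(logits, 0.5)
flat = apply_temperature(logits, 1.5)
print(top_k_tokens, top_p_tokens, choice)
```

With these numbers, both filters keep only ketchup and mustard, and the final pick is a weighted random choice between the two.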

Prompts: approaches and strategies

The effectiveness of an LLM’s response often depends on how the prompt is written. The structure, clarity, and context of your input directly affect the quality and usefulness of the output. A clear prompt reduces ambiguity and steers the system toward the goal.

Picture the model as a dependable assistant with no intuition. It follows instructions exactly but won’t grasp vague phrasing, subtle cues, or unspoken context. Use precise and logical wording, accompanied by relevant examples. That’s how LLMs work and why good prompt design is essential.

Basic methods of prompt engineering

First, let’s clarify some common terms used in prompt engineering:

- Prompt — the instruction or request given to an LLM to generate a response.

- System prompt — a hidden directive that shapes how the system behaves. For example: “Always respond in English.”

- Context — the information the system leverages to form its answer. This includes the system prompt, any previous exchanges, and the user’s latest message.

- Context window — the maximum number of tokens an LLM can process at once.

- Completion — the final response the system returns after processing the prompt.

Zero-shot prompting

This method involves asking the system a question or giving a task without examples or added context. It works well for simple, general queries, but may generate vague or unexpected results when the task is more complex.

Example:

Write a short story about a person who discovers a hidden treasure.

Few-shot prompting

In such a case, the prompt includes a few examples to guide the format or style of the desired response. This is helpful when the task has specific requirements or requires a particular tone.

Example 1:

Product: “Wireless Mouse”

Description: “A high-quality wireless mouse that offers precision and comfort for long hours of use. It is ideal for both work and play.”

Example 2:

Product: “Bluetooth Speaker”

Description: “A portable Bluetooth speaker that delivers clear sound and powerful bass, perfect for outdoor adventures.”

Now, write a description for the product “Smartphone Stand.”
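If you assemble few-shot prompts in code, a small helper keeps the examples and the new task in a consistent format. The sketch below is one possible way to do it; the function name and the exact layout are assumptions, not a required convention.

```python
def build_few_shot_prompt(examples, new_product):
    """Format solved examples, then the unsolved case for the model to complete."""
    blocks = [
        f'Product: "{product}"\nDescription: "{description}"'
        for product, description in examples
    ]
    # The final block leaves the description empty so the model fills it in.
    blocks.append(f'Product: "{new_product}"\nDescription:')
    return "\n\n".join(blocks)

examples = [
    ("Wireless Mouse",
     "A high-quality wireless mouse that offers precision and comfort for long hours of use."),
    ("Bluetooth Speaker",
     "A portable Bluetooth speaker that delivers clear sound and powerful bass."),
]

prompt = build_few_shot_prompt(examples, "Smartphone Stand")
print(prompt)
```

Ending the prompt with an empty “Description:” field nudges the model to continue in the same format as the examples.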

In-context learning

This method relies on examples within the prompt to teach the system how to perform a task, without retraining.

Example:

input: 12-12-2022

output: 12:12:2022

input: 13-01-2023

output: 13:01:2023

If you now enter: 24-08-2019, the expected output is: 24:08:2019.

This technique can also include role specification:

Imagine you are an English teacher. Explain the word “resilient” in simple language to a student who is just starting to learn English.

Chain-of-thought prompting

This approach encourages the system to explain its reasoning step by step. Instead of jumping straight to the final answer, it lays out the logic behind each decision. This helps improve accuracy in tasks that involve calculation or analysis.

Example:

Calculate the total cost of a shopping list. First, calculate the cost of each item. Then, sum them up to get the total.

Items:

- Apple - $2

- Banana - $1.5

- Orange - $3

Expected response:

First, calculate the cost of each item:

Apple: $2

Banana: $1.5

Orange: $3

Now, sum them up to get the total: 2 + 1.5 + 3 = $6.5

Self-consistency

This method involves generating several possible answers and selecting the one that appears most often.

Example:

What is the capital of Japan?

Answer 1: Tokyo

Answer 2: Osaka

Answer 3: Tokyo

The answer that appears twice is more likely to be correct — Tokyo.

This reduces the chance of random mistakes and increases the reliability of results.
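The voting step can be sketched in a few lines. Here the list of answers is hard-coded to match the example; in practice each answer would come from a separate model call with some randomness enabled. The helper name is hypothetical.

```python
from collections import Counter

def majority_answer(answers):
    """Return the most common answer and the share of votes it received."""
    normalized = [answer.strip().lower() for answer in answers]
    winner, votes = Counter(normalized).most_common(1)[0]
    return winner, votes / len(normalized)

answers = ["Tokyo", "Osaka", "Tokyo"]
best, agreement = majority_answer(answers)
print(best, round(agreement, 2))
```

The agreement ratio is a useful by-product: a low value suggests the model is unsure and the answer deserves a manual check.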

Multitask prompting

A single prompt includes multiple tasks, and the system is expected to complete each one.

Example:

Task 1: Translate “Hello” into Spanish.

Task 2: Write a sentence with the word “Hello.”

Now, complete both tasks.

Interactive prompting

You guide the system in stages, adjusting or clarifying your request during the exchange.

Example:

You: What is the capital of Italy?

System: Rome.

You: Can you also tell me about the history of Rome?

Prompt engineering with constraints

In this case, you give the system specific rules about how to respond.

Example:

Write a short story about a knight in 100 words, with a heroic tone.

Contextualized prompting

The prompt includes extra background, which helps the reply fit the situation better.

Example:

You are a history teacher explaining the French Revolution to high school students. Can you summarize the key events?

Bias in outputs

This method steers the reply in a certain direction on purpose.

Example:

Provide a scientific explanation of gravity. Focus on how gravity operates on a planetary level.

LLMs illustrate a simple truth: the answer reflects the question. A well-formed request produces a useful result.

Prompt engineering best practices

Crafting effective prompts is essential for obtaining precise and meaningful outcomes. This section outlines strategies that empower you to combine diverse methods and issue clear, decisive commands to guide an LLM. To get a good result, follow these suggestions:

- Mix strategies when needed. If your request involves multiple layers, such as generating structured text or reasoning through several steps, blending methods like chain-of-thought and few-shot prompting can enhance the output.

- Be clear and specific. A clearly formulated prompt helps avoid ambiguity and guides the model toward the desired outcome. Using specific examples and limiting superfluous information also improves prompt effectiveness.

- Treat the prompt as an instruction. The system responds best to straightforward phrasing. Skip the conversational tone and write as if you were issuing a command, not chatting.

- Test and refine. If a prompt doesn’t work well, tweak it. Try different phrasings, formats, or examples to get closer to what you need.

- Use structure when possible. Lists, bullet points, and formatting cues often make your intent clearer.

- Track versions. Keep a record of changes to your prompts. Versioning helps track what works, troubleshoot regressions, and scale your approach.

Example:

    prompt_versioning/
    ├── prompts/
    │   ├── v1/
    │   │   └── prompt.txt
    │   ├── v2/
    │   │   └── prompt.txt
    │   └── v3/
    │       └── prompt.txt
    ├── main.py
    └── .gitignore

A possible main.py loads the selected prompt version and sends it to the model:

    import openai  # requires openai>=1.0 and an API key (for example, in OPENAI_API_KEY)


    def get_prompt(version):
        """Gets a prompt from a file."""
        with open(f"prompts/v{version}/prompt.txt", "r", encoding="utf-8") as f:
            return f.read()


    def generate_response(prompt):
        """Generates a response using the OpenAI API and the gpt-4o model."""
        response = openai.chat.completions.create(
            model="gpt-4o",
            messages=[
                {"role": "system", "content": "You are a helpful assistant."},
                {"role": "user", "content": prompt},
            ],
            max_tokens=200,
        )
        return response.choices[0].message.content.strip()


    if __name__ == "__main__":
        version = 3  # Selecting a prompt version
        prompt = get_prompt(version)
        response = generate_response(prompt)
        print(f"Prompt (version {version}):\n{prompt}\n")
        print(f"Response:\n{response}")

RAG: generating answers with external data

Retrieval-Augmented Generation (RAG) is a method that combines external information retrieval with text generation. A standalone LLM responds based only on what it learned during training, whereas a RAG system can incorporate up-to-date data from external sources. This makes its responses more accurate and relevant, especially in fast-changing domains where pre-trained knowledge alone is not enough.

How RAG operates

1. Retrieval. When a user asks a question, the system first searches external sources like databases, documents, or websites. It can use several types of search:

- Vector search: finds texts with similar meaning.

- Keyword search: looks for documents with exact terms from the query.

- Hybrid search: combines both to increase accuracy.

2. Augmentation. The system adds the retrieved text to the original user query, building an expanded context. This context is passed into an LLM.

3. Generation. The model generates a response using both its internal knowledge and the new, retrieved data.
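The first two steps can be sketched without any external services. In this simplified illustration, retrieval is a plain word-overlap count standing in for a real search engine, and the function names and prompt wording are assumptions. The resulting prompt is what would be sent to an LLM in the generation step.

```python
import re

def tokenize(text):
    """Lowercase the text and return its set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query, documents, top_n=2):
    """Retrieval: rank documents by how many query words they share."""
    query_terms = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_terms & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_n]

def augment(query, retrieved):
    """Augmentation: combine the retrieved text and the question into one prompt."""
    context = "\n".join(f"- {doc}" for doc in retrieved)
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )

documents = [
    "Artificial intelligence is a technology that mimics human thinking.",
    "Robotics uses AI to automate processes.",
    "Deep learning algorithms are part of artificial intelligence.",
]
query = "How does artificial intelligence operate?"

retrieved = retrieve(query, documents)
prompt = augment(query, retrieved)
print(prompt)  # Generation: this prompt would be passed to the LLM
```

Because the overlap count only sees exact words, the document that says “AI” instead of “artificial intelligence” is left out, which is one reason production systems add vector or hybrid search.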

RAG architecture

1. Preparing the Data. The first step is to collect and clean your data, then structure and index it in a format suitable for search. This often involves using vector databases, such as Pinecone, Milvus, or Weaviate. The pgvector extension can be used to add vector search capabilities to PostgreSQL. The accuracy of the search process depends heavily on how well the data is indexed.

2. Retrieving Relevant Information. At this stage, the system searches for the most pertinent content in the vector store. Some tools, such as Pinecone, provide built-in search functions. Others may require additional configuration. The objective here is to extract the fragments most relevant to the user’s query.

3. Generating the Response. Once the system has the context, it passes both the user query and the retrieved content to an LLM. The relevance of the received data directly affects the clarity and accuracy of the output.

RAG: strategies

For RAG to work effectively, simply integrating external sources isn’t enough — it’s crucial to adopt the right approach for organizing, searching, and processing data. Below, we’ll explore the main strategies that enhance each stage of this process, from indexing and searching to ranking and generating responses.

Indexing strategies

Indexing is how data gets organized so it can be searched and retrieved quickly. This is a key part of any RAG system, where speed and relevance matter when pulling documents for a response.

Vector indexing

This approach uses embeddings together with a vector store such as FAISS (a similarity-search library), Weaviate, Milvus, or Pinecone to run semantic search.

How it operates:

- Documents are converted into vectors using an embedding model (e.g., OpenAI embeddings).

- These vectors are saved in a database.

- Incoming queries are also turned into vectors for comparison.

Works well when meaning matters more than exact wording.

Text-based indexing

This method splits documents into individual terms (words or phrases) and builds an inverted index. Each term links to a list of documents where it appears.

How it operates:

- The document is broken down into terms.

- Each term is mapped to every document that contains it.

- At query time, the system looks up relevant terms and ranks the results.

Works best when exact keyword matching is needed.
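A toy inverted index shows the idea. This is a minimal sketch with hypothetical helper names; real search engines add stemming, stop-word handling, and weighted scoring on top of it.

```python
import re
from collections import defaultdict

def terms(text):
    """Split text into lowercase word terms."""
    return re.findall(r"[a-z0-9]+", text.lower())

def build_inverted_index(documents):
    """Map each term to the set of document ids that contain it."""
    index = defaultdict(set)
    for doc_id, text in documents.items():
        for term in terms(text):
            index[term].add(doc_id)
    return index

def search(index, query):
    """Return document ids ordered by the number of matching query terms."""
    hits = defaultdict(int)
    for term in set(terms(query)):
        for doc_id in index.get(term, ()):
            hits[doc_id] += 1
    return sorted(hits, key=lambda doc_id: (-hits[doc_id], doc_id))

documents = {
    1: "Artificial intelligence is a technology that mimics human thinking.",
    2: "Robotics uses AI to automate processes.",
    3: "Deep learning algorithms are part of artificial intelligence.",
}

index = build_inverted_index(documents)
results = search(index, "artificial intelligence")
print(results)
```

Lookups touch only the terms in the query, which is why this structure stays fast even for large collections.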

Hierarchical indexing

This method groups documents into structured categories. For example, data might be sorted by topics like “Medicine,” “Law,” or “Finance.” It becomes useful at scale when the volume of content is high and needs structure to remain searchable.

Chunking strategies

Chunking is the process of breaking a large text into smaller pieces so they can be indexed and used for generation. The goal is to find the right balance — each chunk should include enough information to be useful without becoming too broad or noisy. Good chunking improves search, reduces irrelevant data, increases precision, and helps work around the limits of a context window.

Fixed-length chunking

The text is split into chunks with a fixed number of tokens, typically between 256 and 512. This method is simple and easy to apply during preprocessing.

Sentence-based chunking

The document is split into sentences. It keeps chunks semantically consistent and is also easy to implement.

Paragraph-based chunking

Chunks follow paragraph boundaries. This creates more contextually rich segments but isn’t ideal for all types of documents.

Semantic chunking

The method uses models like Sentence Transformers to cut text into sections based on meaning rather than structure. It’s more complex but can generate higher-quality chunks.

Overlapping chunking

In this approach, each chunk repeats the last part of the previous one, so some text is deliberately duplicated. This keeps context that straddles a chunk boundary intact and helps maintain cohesion between the fragments.
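Fixed-length chunking with overlap can be sketched as follows. For simplicity, the sketch counts whitespace-separated words rather than model tokens, which is an assumption; a real pipeline would typically count tokens with the model’s tokenizer. The function name and default sizes are illustrative.

```python
def chunk_words(text, chunk_size=256, overlap=32):
    """Split text into chunks of chunk_size words, each sharing
    `overlap` words with the previous chunk."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be non-negative and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks

sample = " ".join(f"w{i}" for i in range(10))
chunks = chunk_words(sample, chunk_size=4, overlap=1)
for chunk in chunks:
    print(chunk)
```

In the sample run, the last word of each chunk reappears as the first word of the next one, which is the overlap at work.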

Query optimization strategies

The quality of a user’s input directly affects the quality of the response. Small improvements in phrasing can lead to better, more accurate results.

Query expansion

This strategy involves adding related terms, synonyms, or relevant keywords to broaden the search scope.

Original query: How does artificial intelligence operate?

Expanded query: How does artificial intelligence operate? Principles of AI, basics of artificial intelligence, AI algorithms.

Query rewriting

This approach reformulates the input to improve clarity or increase the chances of retrieving useful information.

Original query: How does artificial intelligence operate?

Rewritten: How does AI function? Operating principles of artificial intelligence? What is AI, and how does it operate?

Query correction

This strategy fixes typos, word errors, or grammar mistakes that might interfere with search accuracy.

Original query: How does artificial intelligence operate?

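Corrected version: The query is already fine. But if it had spelling or syntax issues, for example “How does artifical inteligence oprate?”, the system could automatically correct it to “How does artificial intelligence operate?”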

Retrieval strategies

These are methods used to find and extract relevant documents or data fragments from a storage system.

Exact match retrieval

This is the traditional approach. The system looks for documents that contain exact word or phrase matches from the query.

Semantic search retrieval

The query is converted into a vector, which is then compared with the document embeddings using a measure such as cosine similarity. The system returns content that is closest in meaning, not just wording.
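Cosine similarity itself is simple to compute. The sketch below uses made-up three-dimensional vectors purely for illustration; real embedding models produce vectors with hundreds or thousands of dimensions, and the document names are hypothetical.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1 = same direction, 0 = unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical embeddings for two documents and one query.
doc_vectors = {
    "doc_ai": [0.9, 0.1, 0.0],
    "doc_cooking": [0.0, 0.2, 0.9],
}
query_vector = [0.8, 0.2, 0.1]

ranked = sorted(
    doc_vectors,
    key=lambda name: cosine_similarity(query_vector, doc_vectors[name]),
    reverse=True,
)
print(ranked)
```

Because the measure depends only on direction, not vector length, a short and a long document about the same topic can still score as close matches.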

RAG integrates external data retrieval with advanced text generation to deliver accurate, contextually enriched responses. It combines strategies to ensure that even complex queries yield precise and current answers.

Result ranking strategies

Once search results are retrieved, they need to be ranked. This matters for a few reasons: the context window limits how many tokens can be sent to the model, longer prompts can reduce answer quality, and not all results are useful. Ranking helps choose what’s most relevant.

Let’s use an example:

User query: How does artificial intelligence operate?

Search results:

- Document 1: “Artificial intelligence is a technology that mimics human thinking.”

- Document 2: “Robotics uses AI to automate processes.”

- Document 3: “Deep learning algorithms are part of artificial intelligence.”

The goal is to decide which results best match the query.

TF-IDF and BM25 (traditional methods)

TF (Term Frequency): the more often “artificial” or “intelligence” appears in a document, the more weight it gets.

IDF (Inverse Document Frequency): if a word appears everywhere, it’s less valuable. Unique terms are weighted more heavily.

Scores for the example:

- Document 1: High (includes both “artificial” and “intelligence”)

- Document 2: Low (uses “AI”, so it contains neither “artificial” nor “intelligence”)

- Document 3: High (also includes both “artificial” and “intelligence”)

Takeaway: TF-IDF works well with keyword presence, but doesn’t understand that “AI” means “artificial intelligence.”

BM25 is a widely used ranking formula in information retrieval that builds on TF-IDF. It adds two factors that matter in real-world search: document length normalization, so that long documents are not favored simply for containing more words, and keyword saturation, so that repeating a word many times yields diminishing returns.

Scores for the example:

- Document 1: High (short, contains both query terms)

- Document 2: Low (contains neither query term)

- Document 3: High (short, contains both query terms)

Takeaway: BM25 is more robust than plain TF-IDF when documents differ in length or repeat keywords, but it still depends on exact term matches.
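For readers who want to see the arithmetic, here is a compact sketch of the standard Okapi BM25 formula applied to the three example documents. The parameter values k1 = 1.5 and b = 0.75 are common defaults, chosen here as an assumption, and the IDF uses the non-negative variant with “+ 1” inside the logarithm.

```python
import math
import re
from collections import Counter

def tokenize(text):
    """Split text into lowercase word terms."""
    return re.findall(r"[a-z0-9]+", text.lower())

def bm25_scores(query, documents, k1=1.5, b=0.75):
    """Score each document against the query with Okapi BM25."""
    docs = [tokenize(doc) for doc in documents]
    n_docs = len(docs)
    avg_len = sum(len(doc) for doc in docs) / n_docs
    # Number of documents that contain each term.
    doc_freq = Counter(term for doc in docs for term in set(doc))
    scores = []
    for doc in docs:
        tf = Counter(doc)
        score = 0.0
        for term in tokenize(query):
            if term not in tf:
                continue
            idf = math.log((n_docs - doc_freq[term] + 0.5) / (doc_freq[term] + 0.5) + 1)
            # Length normalization: longer-than-average documents are damped.
            norm = tf[term] + k1 * (1 - b + b * len(doc) / avg_len)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores

documents = [
    "Artificial intelligence is a technology that mimics human thinking.",
    "Robotics uses AI to automate processes.",
    "Deep learning algorithms are part of artificial intelligence.",
]
query = "How does artificial intelligence operate?"

scores = bm25_scores(query, documents)
for doc, score in zip(documents, scores):
    print(f"{score:.3f}  {doc}")
```

Document 2 scores zero because it shares no exact terms with the query, while Documents 1 and 3 score close to each other, with the slightly shorter Document 3 a little ahead.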

Embedding similarity

Instead of looking for exact matches, text is converted into vectors, and similarity is calculated based on meaning.

Scores for the example:

- Document 1: High (very close in meaning)

- Document 2: Medium (“AI” is related to “artificial intelligence”)

- Document 3: High (“deep learning” is strongly connected to AI)

Takeaway: This method understands synonyms and context (for example, it maps “AI” to “artificial intelligence”). It works even when there’s no exact word match (for instance, “deep learning” is linked to AI).

Fine-tuned LLM ranking

This method uses a model specifically trained to rank search results. The training helps it identify which items are most relevant to the query.

Re-ranking (cross-encoders)

This is a variation of the previous approach. The system sends query-document pairs to the model for evaluation.

Example input:

- (“How does artificial intelligence operate?”, “Artificial intelligence is a technology that mimics human thinking.”)

- (“How does artificial intelligence operate?”, “Robotics uses AI to automate processes.”)

- (“How does artificial intelligence operate?”, “Deep learning algorithms are part of artificial intelligence.”)

The model then decides which document is the best match.

Response generation strategies

A RAG system can produce its response in different ways, which differ in how abstract they are and how closely they stick to the source text. Depending on what the user needs, it can follow one of these strategies:

Extractive summarization

The response is built by selecting the most relevant fragments from the retrieved documents. This approach is useful when accuracy and preserving the original meaning are a priority.

Example:

“According to document X, the answer to your question is…”

Abstractive summarization

The system rewrites the information in its own words. This helps condense and rephrase content, but can introduce mistakes or slight distortions.

Example:

“The main point of document X is that…”

Query-focused summarization

The reply takes the user’s question into account, not just the search results. This is useful when the answer depends on the specific context of the query.

Example:

“Based on the documentation in X, in your case, you should use…”

Where LLMs help — and where they fall short

Let’s look at where models make a real difference — and where they’re more of a distraction than a help.

When it makes sense to use LLMs

LLMs handle some tasks well, but they’re not a one-size-fits-all solution. Use them where their strengths matter:

- Content generation. LLMs are good at creating text and code, and related generative models do the same for audio or video. Their architecture is built to predict and complete sequences, which makes them effective here.

- Natural language processing. Tasks like summarizing, analyzing, or transforming text fit their strengths. They’re made to operate with language and find patterns within it.

- Tasks where precision isn’t critical. LLMs are useful for drafting ideas, prototyping, or getting a rough first pass. They’re not designed for perfect answers — treat output as a starting point, not a final result.

- Quick adaptation to unstructured text. LLMs perform well when used to extract insights or clean up data from unstructured sources like logs or webpages.

More context:

- In content generation, the quality of the prompt makes a significant difference. The output often needs editing before it’s usable.

- In natural language processing (NLP), LLMs can detect contextual connections and semantic nuances, making them useful for sentiment analysis and extracting key information.

- Models are useful for early drafts or brainstorming. However, in high-stakes fields such as medicine or law, leveraging LLMs without subsequent verification can lead to adverse consequences.

- LLMs can automate collecting data and analyzing large volumes of information, saving time and resources.

When LLMs aren’t the right choice

There are areas where these systems simply don’t hold up:

- When exact accuracy is mandatory. LLMs sometimes “hallucinate” by generating outputs that seem right but aren’t. In finance, healthcare, or legal work, even small mistakes carry serious risk.

- When rigorous reasoning is required. LLMs surface patterns and can imitate step-by-step logic, but they don’t reliably grasp cause and effect. Long logical chains and abstract arguments still need independent verification.

- When emotional intelligence matters. LLMs don’t have feelings. They can label the sentiment of a text, but they don’t perceive mood or emotional cues the way a person does, and they won’t build trust through empathy.

- When handling sensitive data. Leveraging LLMs raises privacy concerns. Inputs may be stored or used for training, which can pose risks depending on the context.

What we’ve learned, and what comes next

As you’ve seen throughout this article, LLMs have become powerful tools — they’re already reshaping how we work, create, and interact with information. From automating everyday tasks to powering advanced data analysis and content generation, their impact is real and growing. These systems unlock impressive capabilities, and we’re only beginning to see what’s possible.

But with great potential comes responsibility. LLMs still have their blind spots — they can make confident mistakes, miss the nuance behind a question, or surface privacy concerns. That’s why it’s so important to approach their use with care, clarity, and intention. Our goal here wasn’t just to explain how these systems work, but to help you think critically about when and how to use them. With the right mindset, you can unlock their value while avoiding the pitfalls, and we hope this guide brought you one step closer to doing just that.
